Building the missing infrastructure layer for Physical AI.

At Actuate 2025, Foxglove Head of Product Bahram Banisadr took the stage to announce a major leap forward for Physical AI development. Over the past year, we have laid the groundwork for something big, and today Bahram made a series of announcements that deliver the missing infrastructure layer for real-world robotics: the Foxglove SDK, real-time remote visualization and teleoperation, Foxglove embedding, new tools for managing data, and performance improvements across the product.

Foxglove is the connective tissue between your robot’s data and your ability to act on it. This post rounds up everything announced at the conference, plus a few major updates from recent months that are in production use.

To support the growing demand for customization, we launched the Foxglove SDK—a unified toolkit designed to make integrating your robotics stack with Foxglove easier than ever before. Whether you're building a small research project or scaling a production fleet, the Foxglove SDK simplifies your journey with a consistent, powerful, and intuitive API for live visualization, structured logging, and remote monitoring.

To provide a consistent experience, we’ve consolidated functionality into a Rust core. The Python and C++ SDKs are written around the Rust core. This allows each language to feel idiomatic, while maintaining consistency between them.

At the core of the SDK is the foxglove Rust crate, which is responsible for context management for writing to MCAP files and streaming live data to the Foxglove app; buffering and connection management for the WebSocket server; and serialization and deserialization of Foxglove schemas, for cases where you don't need to control serialization yourself.

Check out the documentation to learn more and get started.

One of the stars of the show was the new Remote Visualization and Teleoperation capabilities, which are now in private beta. This unlocks what has long been a sticking point in robotics development: the ability to monitor and control a robot live, over the air, through a reliable, low-latency, and secure connection.

Whether you’re debugging a robot in a customer deployment, guiding one through a complex scenario in the field, or simply trying to observe a remote fleet in real time, this new capability bridges the gap between data and action.

Read the full details on Remote Visualization and Teleoperation here.

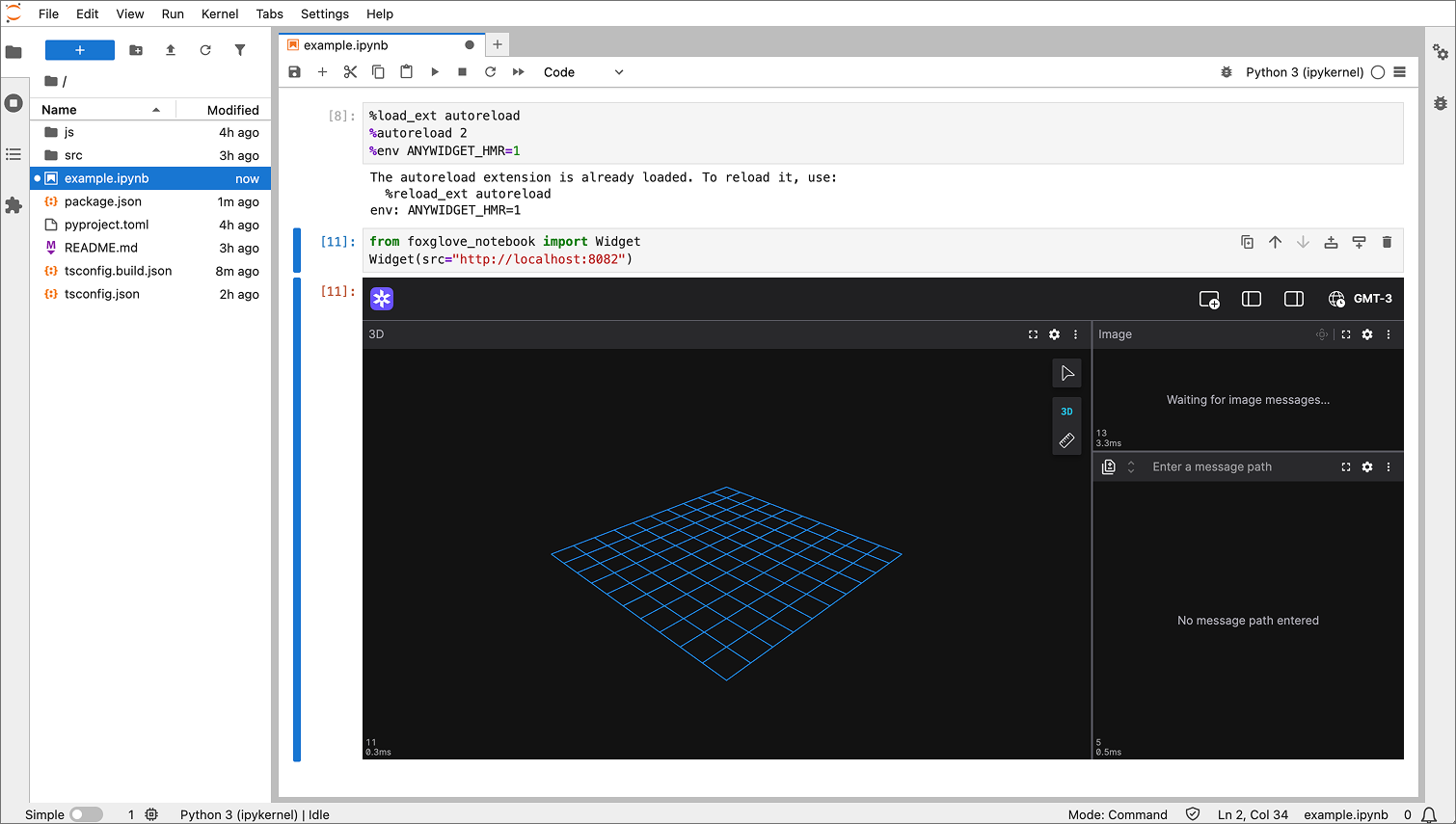

Another highly anticipated integration is support for Jupyter notebooks. This allows you to inspect, visualize, and transform data in-place using Python—all without leaving your analysis environment.

This makes it easy to iterate on ideas, compare edge cases, and build rich narratives around what your robot saw or did. If you’ve ever wished your notebook could include a live video panel, a time-synced chart, and your own custom data filters all side-by-side—you’ll want to keep an eye on this.

Read the full details on the Jupyter notebook integration here.

Now you can bring Foxglove into your own applications. With the new iframe embedding support, you can host any Foxglove layout inside a web app. This is a huge step for anyone building custom interfaces for field operators, customers, or internal teams.

Embedding lets you preconfigure views, manage access, and even hook into user interactions—all without losing the flexibility and depth of Foxglove’s native visualization capability. For example, you can embed a minimalist dashboard showing only a vehicle’s velocity, path plan, and camera feed, then ship that to a field technician with zero setup required.

Whether you’re exposing telemetry to customers or streamlining how support teams inspect deployments, embedding gives you full control over the experience without needing to rebuild the wheel.

For more information, read the documentation.

A couple of months ago, Foxglove quietly shipped one of its most powerful features to date: Topic Message Converters. These converters let you write TypeScript functions that manipulate live or recorded data in real time. Want to merge multiple topics into one? Extract metadata? Normalize units? You can do all of that and more, directly inside Foxglove.

Unlike schema converters, which operate on a 1:1 mapping between custom and known schemas, topic converters let you reshape and remap data however you want. They support multiple input topics, custom logic, and output to any schema—known or custom. This gives you programmatic control over your telemetry pipeline without needing to modify upstream systems.

And because converters are extensions, you can version-control them, test them in isolation, and roll them out across your org. If you’re managing heterogeneous robots or inconsistent schemas across deployments, this is a massive simplification.
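
As a rough illustration of the kind of logic a topic converter can hold, here is a minimal sketch that merges two input topics into one output and normalizes units along the way. Everything in it is an assumption made for the example: the topic names, the message shapes, and the `createSpeedConverter` helper are hypothetical, and the step that registers the function with Foxglove's extension API is deliberately left out (see the documentation for the real registration call).

```typescript
// Hypothetical event wrapper and message shapes; these are NOT Foxglove schemas.
interface TopicEvent<T> {
  topic: string;
  receiveTimeSec: number;
  message: T;
}

interface WheelSpeedMph {
  mph: number;
}

interface VehicleSpeed {
  timeSec: number;
  metersPerSecond: number;
}

const MPH_TO_MPS = 0.44704; // exact conversion factor, miles per hour to m/s
const INPUT_TOPICS = ["/wheel/left", "/wheel/right"]; // hypothetical topic names

// Returns a stateful converter. It remembers the latest speed seen on each
// input topic and emits the average once every input has reported at least once.
function createSpeedConverter(): (
  event: TopicEvent<WheelSpeedMph>,
) => VehicleSpeed | undefined {
  const latest: { [topic: string]: number | undefined } = {};

  return (event) => {
    if (INPUT_TOPICS.indexOf(event.topic) === -1) {
      return undefined; // ignore topics this converter does not handle
    }
    // Normalize units on the way in.
    latest[event.topic] = event.message.mph * MPH_TO_MPS;

    let sum = 0;
    for (const topic of INPUT_TOPICS) {
      const value = latest[topic];
      if (value === undefined) {
        return undefined; // still waiting for at least one input topic
      }
      sum += value;
    }
    return {
      timeSec: event.receiveTimeSec,
      metersPerSecond: sum / INPUT_TOPICS.length,
    };
  };
}
```

Keeping the conversion logic in a plain function like this is one way to make it testable in isolation: you can feed it hand-built events in a unit test before wiring it into an extension.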

Read more about Topic Message Converters here.

Data Loaders are a new extension API that lets you open non-MCAP file formats directly in the app, with no upfront conversion required. If you’ve ever been blocked by legacy logs or proprietary formats, this feature is for you. Instead of writing a batch conversion pipeline just to inspect your data, you can now write a small C++ or Rust module that plugs directly into Foxglove and teaches it how to read your files.

These modules compile to WebAssembly and integrate seamlessly into the app, letting your team drag-and-drop old CSVs, binary logs, or custom telemetry formats and see them immediately in panels and plots. You only need to write the data reader once—publish the extension to your organization, and everyone can open those files with no extra tooling.

This is especially useful for teams sitting on terabytes of legacy data, or those who frequently iterate on their internal logging formats. It’s now dramatically faster to get from raw data to visual insight, and the extension model keeps things maintainable and shareable across your org.

Read more about Data Loaders here.

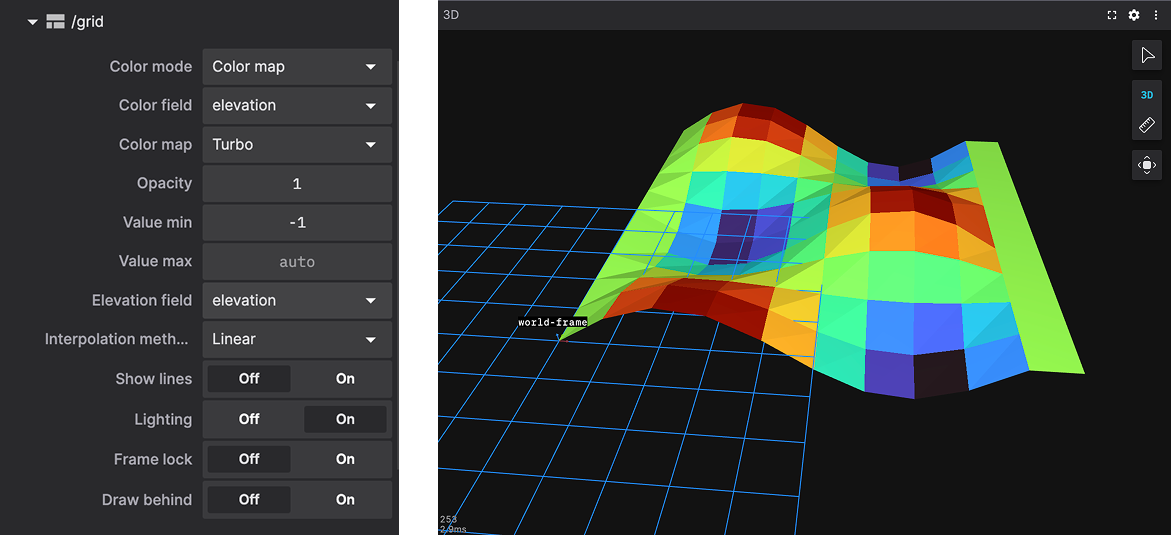

The Foxglove 3D panel now speaks the language of occupancy: voxel and 3D grids are supported natively.

This brings spatial perception into focus by rendering volumetric data as voxel-aligned cubes or layered maps. Whether you’re working on SLAM, 3D reconstruction, or autonomous exploration, having accurate, performant visual feedback on grid maps is essential—and now it’s native.

We also added map tiles to the 3D panel, so you can visualize your robot's position and data on street, satellite, or custom map layers. This adds geographical context for outdoor navigation, field testing, and autonomy development by letting you layer 2D and 3D information, such as point clouds and object detections, directly onto a map.

Read more about Grids and Voxels here. See the Maps in 3D changelog here.

Robots don’t just see and move—they hear and transform. The new Audio Panel adds real-time waveform visualization and playback support to Foxglove. This is ideal for debugging voice recognition, telepresence, or any system where sound matters.

You can visualize foxglove.RawAudio messages directly in a layout, scrubbing through recordings or listening live. Audio is often the missing piece in retrospective investigations—and now it’s first-class.

Read more about the Audio panel here.

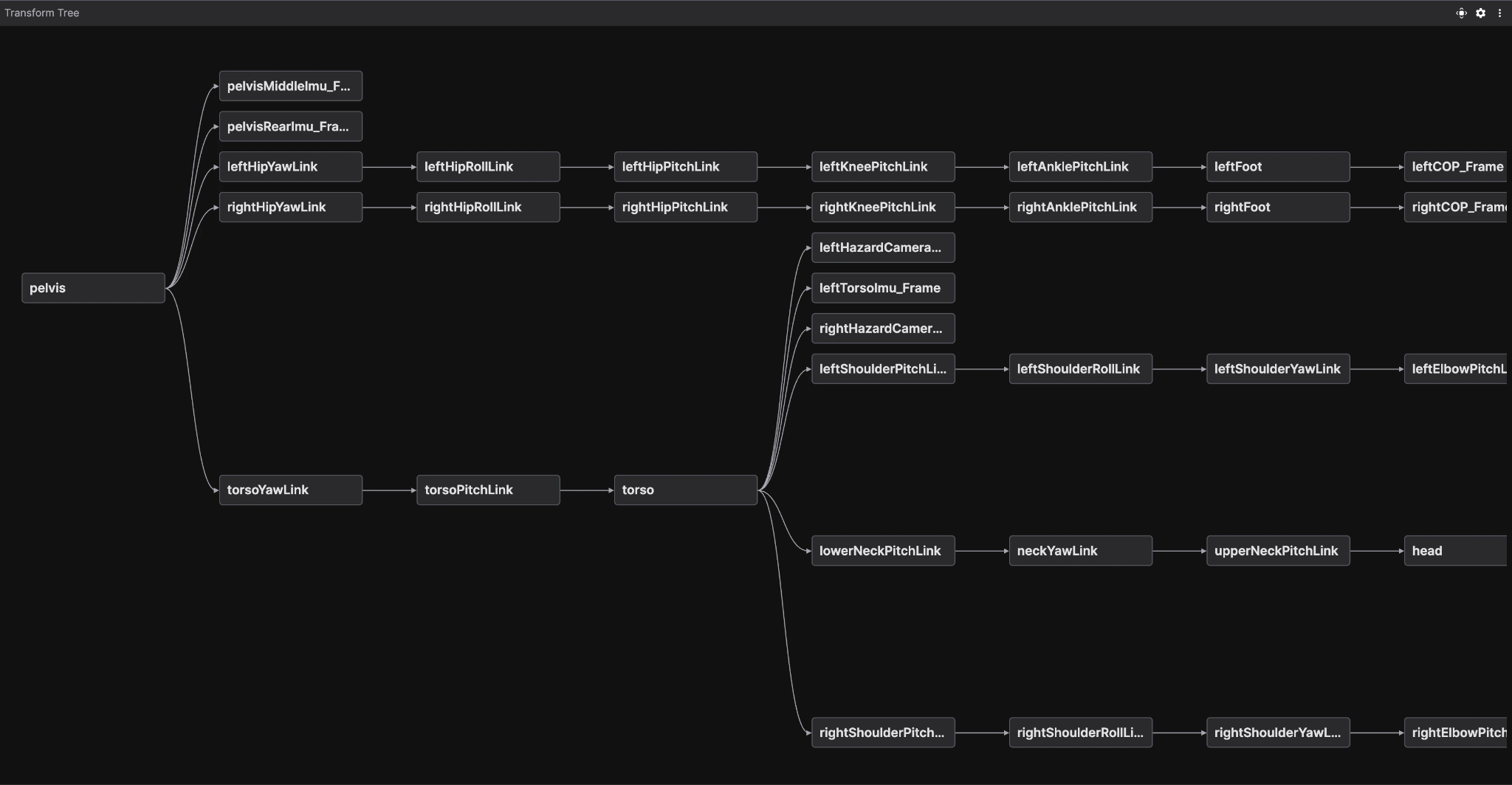

In parallel, the new Transform Tree panel gives you a real-time view into your robot’s coordinate frames. You can inspect the structure, follow frame hierarchies, and debug transforms without switching tools. This is invaluable when working on multi-sensor fusion, SLAM, or complex robot kinematics.
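
To make "following frame hierarchies" concrete, here is a small sketch of the underlying data structure: each frame points at its parent, and walking those links leads to the root. It is an illustration only, not how the panel is implemented. The frame names and the `FrameTable` shape are hypothetical, and the composition step handles translations only; real transforms also carry rotation, which this sketch ignores.

```typescript
type Vec3 = [number, number, number];

// Hypothetical link: a child frame's parent and its offset within that parent.
interface FrameLink {
  parent: string;
  translation: Vec3;
}

// Maps each child frame name to its link; the root frame has no entry.
type FrameTable = { [child: string]: FrameLink | undefined };

const exampleFrames: FrameTable = {
  base_link: { parent: "map", translation: [2, 1, 0] },
  lidar: { parent: "base_link", translation: [0.5, 0, 1.5] },
  camera: { parent: "lidar", translation: [0, 0.25, -0.25] },
};

// Walks parent links from `frame` up to the root and returns the visited frames.
function pathToRoot(frame: string, table: FrameTable): string[] {
  const path: string[] = [frame];
  let link = table[frame];
  while (link !== undefined) {
    if (path.indexOf(link.parent) !== -1) {
      throw new Error("cycle detected at frame " + link.parent);
    }
    path.push(link.parent);
    link = table[link.parent];
  }
  return path;
}

// Sums translations along the path to locate the frame's origin in the root.
// Assumption: every rotation is identity, so offsets simply add up.
function originInRoot(frame: string, table: FrameTable): Vec3 {
  const total: Vec3 = [0, 0, 0];
  for (const name of pathToRoot(frame, table)) {
    const link = table[name];
    if (link === undefined) {
      continue; // the root frame contributes no offset
    }
    total[0] += link.translation[0];
    total[1] += link.translation[1];
    total[2] += link.translation[2];
  }
  return total;
}
```

With the example table, `pathToRoot("camera", exampleFrames)` visits `camera`, `lidar`, `base_link`, and `map` in that order. A broken or cyclic parent link shows up immediately in a walk like this, which is the kind of problem a tree view makes easy to spot.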

Check out the documentation to learn more and get started.

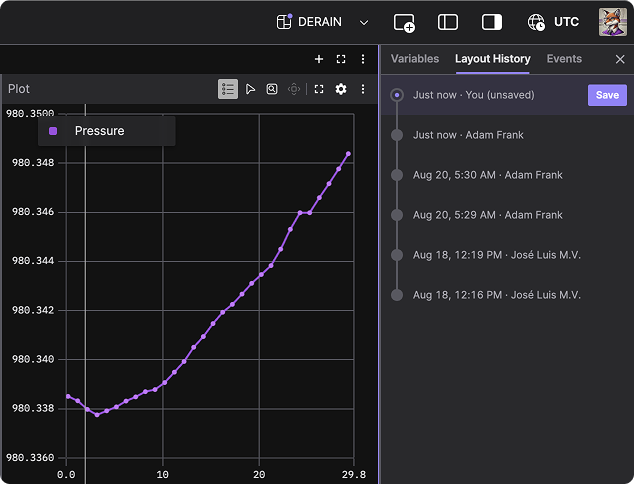

We’ve all been there—someone changes the layout right before a test, and now you’ve lost a known-good configuration. With Layout History and the Layout Manager, every saved layout is versioned, auditable, and restorable with a single click.

You can now organize layouts into folders, drag and drop them between teams, and confidently roll back changes. This isn’t just a UI tweak—it’s real tooling for managing visualization workflows at scale, across an organization.

Read more about Layout Manager and Layout History here.

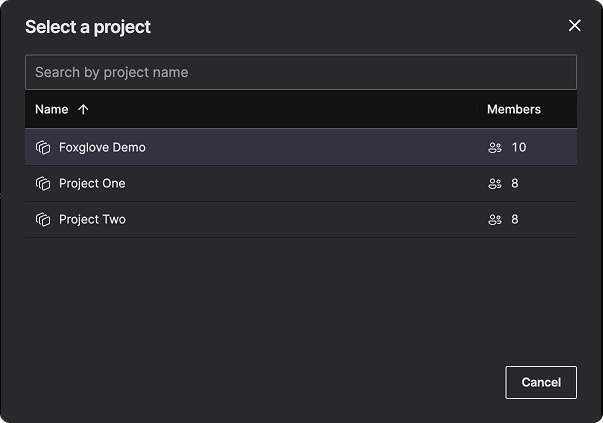

Foxglove’s new Projects feature brings structure to growing teams by introducing strong data isolation and access control. Every resource—recordings, devices, events—belongs to a Project. This ensures clean separation between dev and prod, between customers, or across teams.

Critically, recordings cannot be migrated between Projects. That may sound like a limitation, but it’s actually a feature—it provides strong guarantees about data provenance. If you’re managing compliance, audits, or incident response, this clarity becomes essential.

Read more about Projects here.

There’s a lot of new capability, but behind the scenes, Foxglove has also become much faster.

Recent updates to data loading, video buffering, the State Transitions and Plot panels, and much more have made it possible to load massive datasets, seek to precise windows, and scrub through visualizations without freezing your browser or waiting on long queries.

If you haven’t used Foxglove in a while, you’ll feel the difference immediately.

Read more about some of the performance improvements here.

Beyond the headline features, dozens of small but meaningful UX improvements have landed across the product. The Plot panel has matured into a core tool, with better data selection, more flexible timestamps, and tighter rendering. Navigation is smoother. Visual state feedback is richer. Everything just works, faster and better.

These improvements are part of a deliberate push to refine the experience as we work to build the most performant and comprehensive platform for developing Physical AI.

Whether you’re focused on perception, control, simulation, or full-system integration, Foxglove helps you understand your robots more deeply, debug faster, and build reliable autonomy with confidence.

View the changelog to see all updates and get started building autonomy with confidence today.